Asynchronous VR Collaboration

WHEN:

September 2018 - April 2019

WHAT:

Independent Study in Computer Science

WHERE:

University of British Columbia, BC, Canada

TOOLS:

- Unity

- HTC Vive

- Affinity Diagramming

- Content Analysis

- Running Usability Studies

The Asynchronous VR Collaboration project is one of the ongoing HCI research projects within the Multimodal User Experience Lab. This research specifically seeks to identify how users utilize multimodal expressions, such as speech, body language and scene manipulation, when reviewing and creating VR recordings.

Special thanks to Dr. Dongwook Yoon, Dr. Cuong Nguyen and Kevin Chow!

September 2018

Transcription & Deictic Gesture Analysis

Assisted with transcribing post-task interviews.

Catalogued instances of deictic gesture use from video data, noting the referent, the approximate distance to the referent, and the keyword used.

October - December 2018

Thematic Content Analysis

Performed qualitative analysis on post-task interview transcriptions using thematic coding techniques. The analysis served to cross-validate results from other research assistants and to surface concepts that had previously been overlooked.

Identified 147 concepts and aggregated them into 24 themes over multiple iterations.

January 2019

Conceptual Design

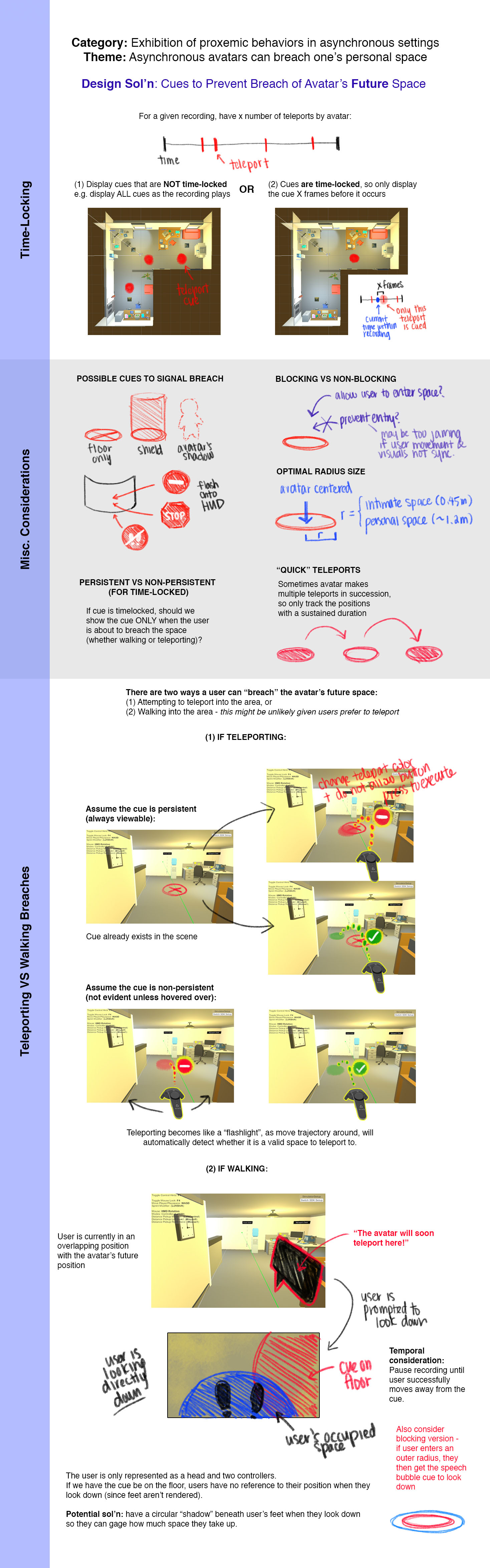

Extracted requirements from the content analysis to guide the conceptual design.

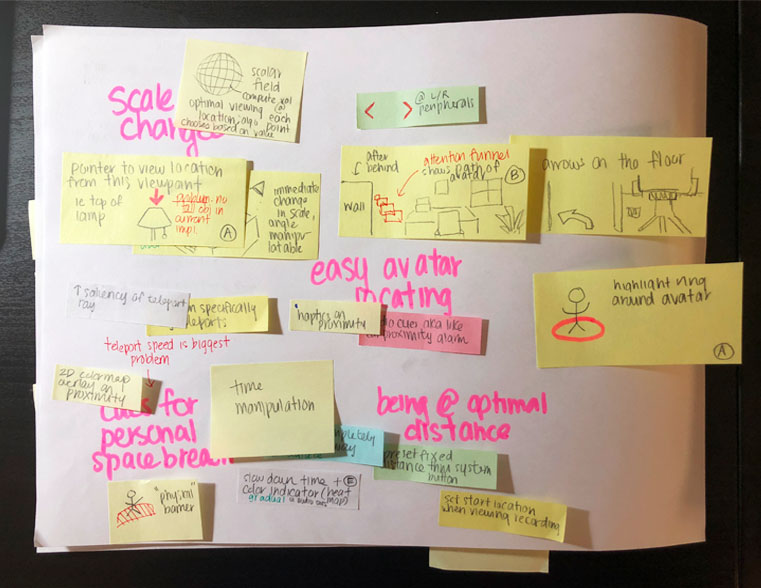

Began brainstorming potential design techniques through affinity diagramming, drawing on design implications from collaborative VR research.

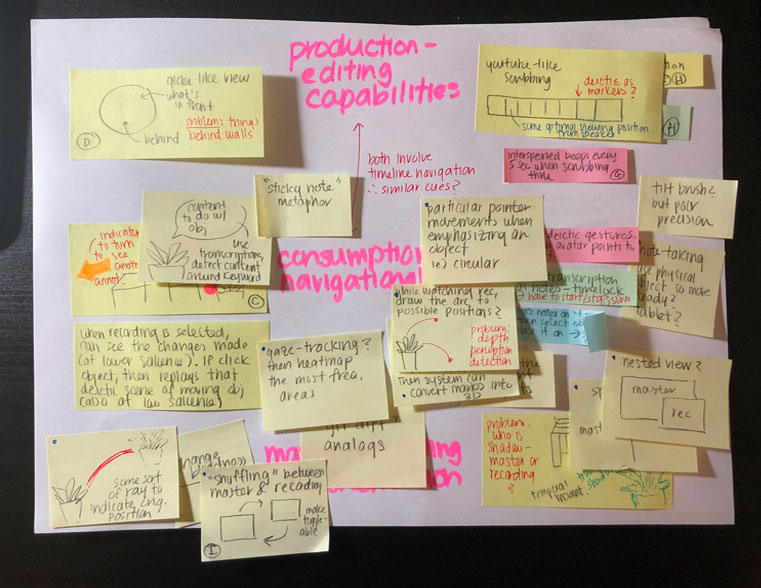

Continued ideation using tablet-drawn annotations superimposed on image stills.

Documented the pros and cons of each potential design in more detail.

Narrowed down the primary design directions, then produced and storyboarded more detailed conceptual designs.

February - March 2019

Proof-of-Concept (POC) Development

I was responsible for implementing three of the four POC features in the existing system:

- Collaborator-Aware X-Ray Vision: renders the occluded avatar, along with any objects within the avatar's point of view (POV).

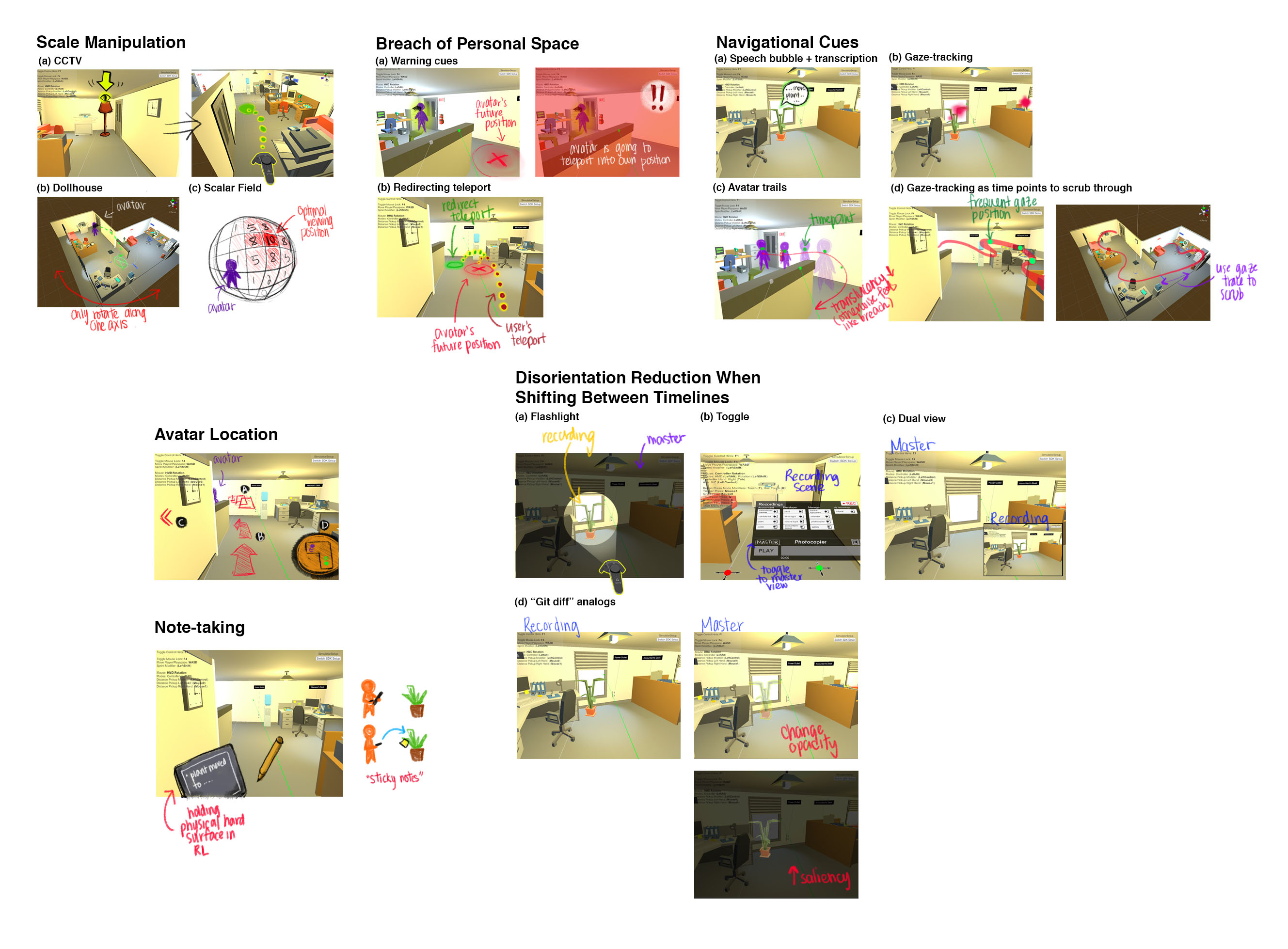

- Animated Scene Transitions: animates the changes between the current timeline and a selected recording's timeline (analogous to `git diff`, or to how code changes are displayed on GitHub).

- Proxemic-Sensitive Teleportation: when the user attempts to teleport, renders the avatar in the space it is anticipated to occupy, and redirects the teleportation arc so that the user's position does not overlap with the avatar's.
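The page doesn't describe how these features were built in Unity. As an illustration only, the following is a minimal Python sketch of the geometry behind two of them. It assumes a flat 2D floor plane, a fixed circular "personal space" radius around the avatar's anticipated position, and scene state stored as a map from object id to position. Every name here (`Vec2`, `redirect_teleport`, `scene_diff`, `transition_frame`) is hypothetical.

```python
import math
from dataclasses import dataclass


# Hypothetical 2D floor-plane vector (x, z); a Unity version would use Vector3.
@dataclass(frozen=True)
class Vec2:
    x: float
    z: float

    def __add__(self, other: "Vec2") -> "Vec2":
        return Vec2(self.x + other.x, self.z + other.z)

    def __sub__(self, other: "Vec2") -> "Vec2":
        return Vec2(self.x - other.x, self.z - other.z)

    def scale(self, k: float) -> "Vec2":
        return Vec2(self.x * k, self.z * k)

    def length(self) -> float:
        return math.hypot(self.x, self.z)


def redirect_teleport(target: Vec2, avatar_future: Vec2, personal_radius: float) -> Vec2:
    """Proxemic-sensitive teleport (sketch, assuming a circular personal space).

    If the requested target falls inside the avatar's anticipated personal space,
    push it radially outward to the boundary; otherwise leave it unchanged.
    """
    offset = target - avatar_future
    dist = offset.length()
    if dist >= personal_radius:
        return target
    if dist == 0.0:
        # Target is exactly on the avatar: pick an arbitrary direction (+x).
        return avatar_future + Vec2(personal_radius, 0.0)
    return avatar_future + offset.scale(personal_radius / dist)


def scene_diff(current: dict, recording: dict) -> dict:
    """'git diff' for two timeline states: {obj_id: (before, after)} for
    objects that were added, removed, or moved. None marks a missing side."""
    changes = {}
    for obj_id in current.keys() | recording.keys():
        before, after = current.get(obj_id), recording.get(obj_id)
        if before != after:
            changes[obj_id] = (before, after)
    return changes


def transition_frame(changes: dict, t: float) -> dict:
    """Positions of moved objects at fraction t (0..1) of the animation,
    using linear interpolation; added/removed objects are skipped here."""
    frame = {}
    for obj_id, (before, after) in changes.items():
        if before is not None and after is not None:
            frame[obj_id] = before + (after - before).scale(t)
    return frame
```

For example, `redirect_teleport(Vec2(0.5, 0.0), Vec2(0.0, 0.0), 1.0)` moves the target out to `Vec2(1.0, 0.0)`. Linear interpolation is only the simplest choice for the transition animation. Added or removed objects could instead be faded in or out.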

March - April 2019

Running Usability Studies

I ran a series of usability studies to assess our four POC features. Participants were asked to perform a set of tasks, once with and once without the assistance of the feature.

Conclusions:

My work contributed to our paper Challenges and Design Considerations for Multimodal Asynchronous Collaboration in VR, which was accepted to ACM CSCW 2019!